Prompting is a heuristic

Prompt engineering covers the methods and strategies used to "communicate" effectively with a large language model. The goal is to elicit a desired behavior from the model without changing its parameters.

The right prompt depends on the model, its particularities, and the behavior you want from it. "Good prompts" are therefore usually found through extensive experimentation.

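There are different types of prompting. This article covers zero-shot prompting, few-shot prompting, chain-of-thought (CoT) prompting, ReAct, and retrieval augmented generation (RAG).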

It is also important to understand the limitations of language models in order to build, tune, prompt, or use them safely. A follow-up article will be dedicated to that topic.

This article will be updated incrementally.

Zero-shot prompting

Zero-shot prompting means asking a model to perform a task without providing any worked examples.

Here is an example:

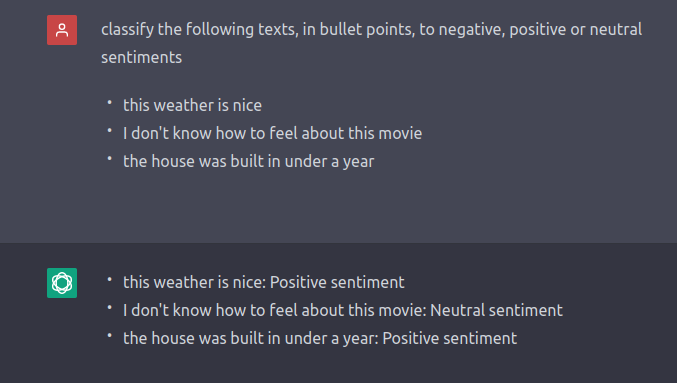

Screenshot: GPT sentiment analysis task 14/09/2023

The screenshot above (taken on the 14th of September 2023) shows that GPT sometimes struggles to classify inputs. The third text, for example, should have been classified as neutral, given that it is factual and does not express a particular inclination.

But you get the gist of it: give it a task, don't give any examples, and wait for it to give you a result.
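As a minimal sketch of what such a prompt can look like, the snippet below assembles a sentiment-classification prompt that states the task and the allowed labels but contains no solved examples. The function name `build_zero_shot_prompt`, the label set, and the sample text are illustrative assumptions, and sending the string to an actual model is left out.

```python
def build_zero_shot_prompt(text: str, labels: list[str]) -> str:
    """Return a classification prompt that states the task but shows no solved examples."""
    label_list = ", ".join(labels)
    return (
        f"Classify the sentiment of the text as one of: {label_list}.\n"
        f"Text: {text}\n"
        "Sentiment:"
    )


# Hypothetical input, chosen only for illustration.
zero_shot_prompt = build_zero_shot_prompt(
    "The meeting starts at 10 am.",
    ["positive", "neutral", "negative"],
)
print(zero_shot_prompt)
```

The prompt ends with an open `Sentiment:` slot so that the model's completion is the label itself.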

Few-shot prompting

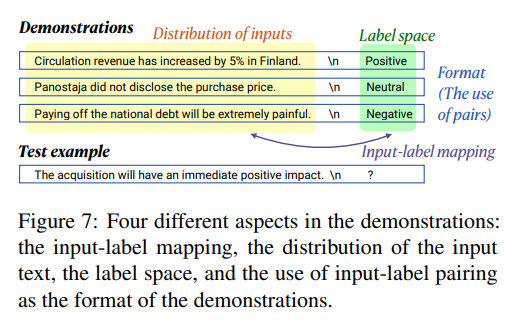

This type of prompting consists of giving the model several desired input-output combinations to steer it to the desired behavior.

Figure showing an example of few shots prompting (source: arXiv:2202.12837)

Tips and tricks for effective few-shot prompts:

- Add labels to the demonstrations

- Write your demonstrations in a similar style and syntax to the desired output

- Try demonstrations with high entropy
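The tips above can be sketched as a small prompt builder: every demonstration carries its label, and demonstrations and query share the same `Text:` / `Sentiment:` layout so the expected output format is unambiguous. The function name `build_few_shot_prompt` and the example sentences are assumptions made for illustration only.

```python
def build_few_shot_prompt(demos: list[tuple[str, str]], query: str) -> str:
    """Format labeled demonstrations and the query in one consistent layout."""
    blocks = [f"Text: {text}\nSentiment: {label}" for text, label in demos]
    # The query reuses the demonstration layout but leaves the label open.
    blocks.append(f"Text: {query}\nSentiment:")
    return "\n\n".join(blocks)


# Hypothetical demonstrations covering each label once.
demos = [
    ("I loved this film.", "positive"),
    ("The plot was dull and slow.", "negative"),
    ("The film runs for two hours.", "neutral"),
]
few_shot_prompt = build_few_shot_prompt(demos, "The soundtrack was wonderful.")
print(few_shot_prompt)
```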

Chain-of-thought (CoT) prompting

Wei et al. 2022 describe chain-of-thought prompting as a method where "a few chain of thought demonstrations are provided as exemplars in prompting."

Instead of providing the model with input-output pairs, the idea is to provide it with a prompt consisting of input-CoT-output triples.

Chain-of-thought prompting is highly beneficial for tasks that involve reasoning, such as arithmetic, commonsense and symbolic reasoning tasks.

Figure: Standard prompting vs. chain-of-thought prompting (source: arXiv:2201.11903)

Tips and tricks for effective CoT prompts:

- CoT prompting has been shown to be effective mainly with large-scale models (roughly 100B parameters or more) (Wei et al. 2022)

- "Prompts with higher reasoning complexity, i.e., chains with more reasoning steps, achieve substantially better performance on multi-step reasoning tasks over strong baselines." (Fu et al. 2023)
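A minimal sketch of how input-CoT-output triples can be laid out in a prompt is shown below. Each exemplar holds a question, the intermediate reasoning, and the final answer, and the new question is appended with an open answer slot. The `CoTExample` class, the `build_cot_prompt` function, and the arithmetic questions are hypothetical and written for this illustration, not taken from the cited papers.

```python
from dataclasses import dataclass


@dataclass
class CoTExample:
    question: str   # input
    reasoning: str  # chain of thought
    answer: str     # output


def build_cot_prompt(examples: list[CoTExample], question: str) -> str:
    """Lay out input-CoT-output triples, then the new question with an open answer."""
    parts = [
        f"Q: {ex.question}\nA: {ex.reasoning} The answer is {ex.answer}."
        for ex in examples
    ]
    parts.append(f"Q: {question}\nA:")
    return "\n\n".join(parts)


# Hypothetical exemplars; the reasoning is written out step by step.
cot_examples = [
    CoTExample(
        "A box holds 4 pens. How many pens are in 3 boxes?",
        "Each box holds 4 pens. 3 boxes hold 3 * 4 = 12 pens.",
        "12",
    ),
    CoTExample(
        "Tom had 10 apples and gave away 3. How many are left?",
        "Tom started with 10 apples. After giving away 3 he has 10 - 3 = 7.",
        "7",
    ),
]
cot_prompt = build_cot_prompt(
    cot_examples,
    "A shelf has 5 books. 2 more shelves with 5 books each are added. How many books are there?",
)
print(cot_prompt)
```

Compared with a plain few-shot prompt, the only change is that each demonstrated answer spells out its reasoning before stating the result.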

ReAct: Reasoning and Acting

ReAct attempts to merge two capabilities: reasoning and acting.

CoT prompting demonstrates how LLMs can be steered to perform well on reasoning tasks, while other LLMs have been trained and/or fine-tuned to perform action tasks (e.g. WebGPT). ReAct (Yao et al. 2022) combines the two by prompting the model to interleave reasoning traces with task-specific actions.
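The loop below is a rough sketch of the reasoning-and-acting idea, not the implementation from the ReAct paper. The model alternates between a thought and an action, each action is executed by a tool, and the resulting observation is appended to the transcript before the next step. The `Action: tool[argument]` text format, the `finish` action, the `lookup` tool, and the scripted stand-in for the model are all assumptions made so the example runs without calling a real LLM.

```python
import re
from typing import Callable, Optional

# Assumed text format for actions: "Action: tool_name[argument]".
ACTION_RE = re.compile(r"Action: (\w+)\[(.*)\]")


def react_loop(
    llm: Callable[[str], str],
    tools: dict[str, Callable[[str], str]],
    question: str,
    max_steps: int = 5,
) -> Optional[str]:
    """Alternate model steps (thought + action) with tool observations."""
    transcript = f"Question: {question}\n"
    for _ in range(max_steps):
        step = llm(transcript)  # expected: "Thought: ...\nAction: tool[arg]"
        transcript += step + "\n"
        match = ACTION_RE.search(step)
        if match is None:
            return None  # the model did not produce a parsable action
        name, arg = match.groups()
        if name == "finish":
            return arg  # the argument of finish[...] is the final answer
        tool = tools.get(name)
        observation = tool(arg) if tool else f"unknown tool: {name}"
        transcript += f"Observation: {observation}\n"
    return None  # step budget exhausted without a final answer


def make_scripted_llm(steps: list[str]) -> Callable[[str], str]:
    """Stand-in for a real model: replays canned steps and ignores the transcript."""
    remaining = iter(steps)
    return lambda transcript: next(remaining)


# Toy tool backed by a hard-coded dictionary.
facts = {"capital of France": "Paris"}
tools = {"lookup": lambda key: facts.get(key, "not found")}

scripted_llm = make_scripted_llm([
    "Thought: I should look up the capital.\nAction: lookup[capital of France]",
    "Thought: The observation answers the question.\nAction: finish[Paris]",
])
react_answer = react_loop(scripted_llm, tools, "What is the capital of France?")
print(react_answer)
```

With a real model, `llm` would receive the growing transcript as its prompt, so each new thought can take earlier observations into account.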

Retrieval Augmented Generation (RAG)

Introduced in 2020 (Lewis et al.), retrieval augmented generation addresses the challenge of giving the model access to external data without having to retrain it.

This method gained a lot of popularity because users can blend their own retrieved content into prompts and thus steer LLMs to perform better on their own data sources.

An example of this is developing QA applications that provide better answers, consistent with a given context.

LangChain provides different RAG modules (loaders, indexers, transformers, retrievers, etc.).
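To illustrate the retrieve-then-prompt idea without depending on any particular library, here is a toy sketch in plain Python. It ranks documents by word overlap with the question, which is a deliberate simplification standing in for a real retriever or vector index, and then blends the top results into the prompt. The function names, the documents, and the prompt wording are assumptions for illustration and are not LangChain APIs.

```python
import re


def tokenize(text: str) -> set[str]:
    """Lowercase the text and split it into a set of word tokens."""
    return set(re.findall(r"\w+", text.lower()))


def retrieve(query: str, documents: list[str], k: int = 2) -> list[str]:
    """Return the k documents sharing the most words with the query (toy retriever)."""
    query_tokens = tokenize(query)
    ranked = sorted(
        documents,
        key=lambda doc: len(query_tokens & tokenize(doc)),
        reverse=True,
    )
    return ranked[:k]


def build_rag_prompt(query: str, documents: list[str], k: int = 2) -> str:
    """Blend the retrieved documents into the prompt as context for the model."""
    context = "\n".join(f"- {doc}" for doc in retrieve(query, documents, k))
    return (
        "Answer the question using only the context below.\n"
        f"Context:\n{context}\n"
        f"Question: {query}\n"
        "Answer:"
    )


# Hypothetical knowledge base.
documents = [
    "The support desk is open from 9 am to 5 pm on weekdays.",
    "Refunds are processed within 14 days of the request.",
    "The office cafeteria serves lunch at noon.",
]
rag_query = "How long do refunds take to be processed?"
rag_prompt = build_rag_prompt(rag_query, documents, k=2)
print(rag_prompt)
```

The model itself is unchanged. Only the prompt carries the external data, which is why no retraining is needed.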

Sources & References

[1] Yao Fu, Hao Peng, Ashish Sabharwal, Peter Clark, and Tushar Khot. Complexity-based prompting for multi-step reasoning. arXiv preprint arXiv:2210.00720, 2022.

[2] Grégoire Mialon, Roberto Dessì, Maria Lomeli, Christoforos Nalmpantis, Ram Pasunuru, Roberta Raileanu, Baptiste Rozière, Timo Schick, Jane Dwivedi-Yu, Asli Celikyilmaz, Edouard Grave, Yann LeCun, and Thomas Scialom. Augmented language models: a survey, 2023.

[3] OpenAI. OpenAI Cookbook, 2023. URL https://cookbook.openai.com/. Last accessed 28 November 2023.

[4] Jason Wei, Xuezhi Wang, Dale Schuurmans, Maarten Bosma, Fei Xia, Ed Chi, Quoc V Le, Denny Zhou, et al. Chain-of-thought prompting elicits reasoning in large language models. Advances in Neural Information Processing Systems, 35:24824–24837, 2022.

[5] Shunyu Yao, Jeffrey Zhao, Dian Yu, Nan Du, Izhak Shafran, Karthik Narasimhan, and Yuan Cao. ReAct: Synergizing reasoning and acting in language models. arXiv preprint arXiv:2210.03629, 2022.
